Scaling CRISPR RNA screening

Using powerful deep learning methods to model Cas13 functionality

Introduction

CRISPR is a revolutionary tool for life scientists, and I have already spent some time writing about its importance in this newsletter. Due to the fundamental programmability of the system, it is now possible for scientists and engineers to make arbitrary edits in DNA sequences. One of the key early applications of this new ability has been to carry out large-scale DNA-targeting CRISPR screens to empirically assess the function of genes in different experimental contexts. There are already robust commercial offerings that commoditize this type of technology.

While the toolbox for genome engineering with CRISPR is rapidly expanding, DNA is not the only target of interest inside of cells. What about the prospect of transcriptome engineering? For many questions in modern genomics, scientists are interested in understanding which RNA transcripts are present in cells at specific times and locations to better understand which gene expression programs drive behaviors such as tissue-specific cell differentiation or disease progression. CRISPR systems that target RNA offer the potential of a programmable technology for perturbing specific transcripts in these systems and observing the outcomes.

The CRISPR-Cas9 system is widely used for making DNA edits. Instead of Cas9, the Cas13 family of enzymes can be used to directly target RNA for editing. Because CRISPR is programmed by a guide RNA sequence and is a highly efficient editor, the CRISPR-Cas13 system holds the promise of overcoming some of the challenges that other RNA targeting systems face. Before this system can be used at scale, it needs to be better characterized in order to be able to efficiently and accurately program it with effective guide sequences for any desired RNA target.

In an effort to scale this technology and make programmable transcriptome engineering a reality, the Hsu Lab at UC Berkeley and the Konermann Lab at Stanford collaborated on an ambitious project to characterize Cas13 behavior. This collaboration was particularly exciting because as a graduate student Patrick Hsu was one of the early contributors to the development of the CRISPR-Cas9 system with Feng Zhang, and Silvana Konermann has also been a pioneer in this field, including being the lead author of the paper describing the CasRx system.

Today, I’m highlighting a preprint from this collaboration entitled “Deep learning of Cas13 guide activity from high-throughput gene essentiality screening” which was led by Stanford Bioengineering graduate student Jingyi Wei. This paper combines state-of-the-art deep learning with a clever experimental strategy to characterize Cas13 behavior, opening the door to large-scale guide design.

Key Advances

In this study, the specific Cas system in question was CasRx, the Cas13d-NLS from R. flavefaciens, which is a “highly compact RNA targeting effector with robust activity in mammalian and plant cells.” Given this enzyme, what is the best way to characterize its behavior?

One useful abstraction is to think of this problem as a simple function: Cas13(guide_seq) = edit. We want to understand the functional mapping between a guide RNA used to program CRISPR-CasRx and the edit that it will make. With this goal, a viable strategy is to use machine learning, and specifically deep learning, to discover this relationship. The beauty of deep learning is that it is an enormously powerful new programming paradigm that lets us approximate these types of functions directly from data.

Given a sufficient number of photos and their corresponding labels, deep learning can be used to learn the functional mapping between the two. Beyond building state-of-the-art classifiers of photos of cats, deep learning has already had extraordinary success on hard problems in science. Given data on amino acid sequences and the structures that they fold into, deep learning was used to effectively solve the protein folding problem. This general strategy leads to another question: what data can we use to characterize CasRx behavior?

The approach used in this study was to perform a large-scale gene essentiality screen. The group “designed a library of >127,000 guide RNAs tiling 55 essential human transcripts with single-nucleotide resolution and assayed the effect of each guide on cell proliferation.” The beauty of this setup is that because the genes being targeted are essential, you get a clear readout of whether or not a given guide was effective: cells that receive an effective guide lose an essential transcript and stop growing (proliferating), so that guide drops out of the population. [1]

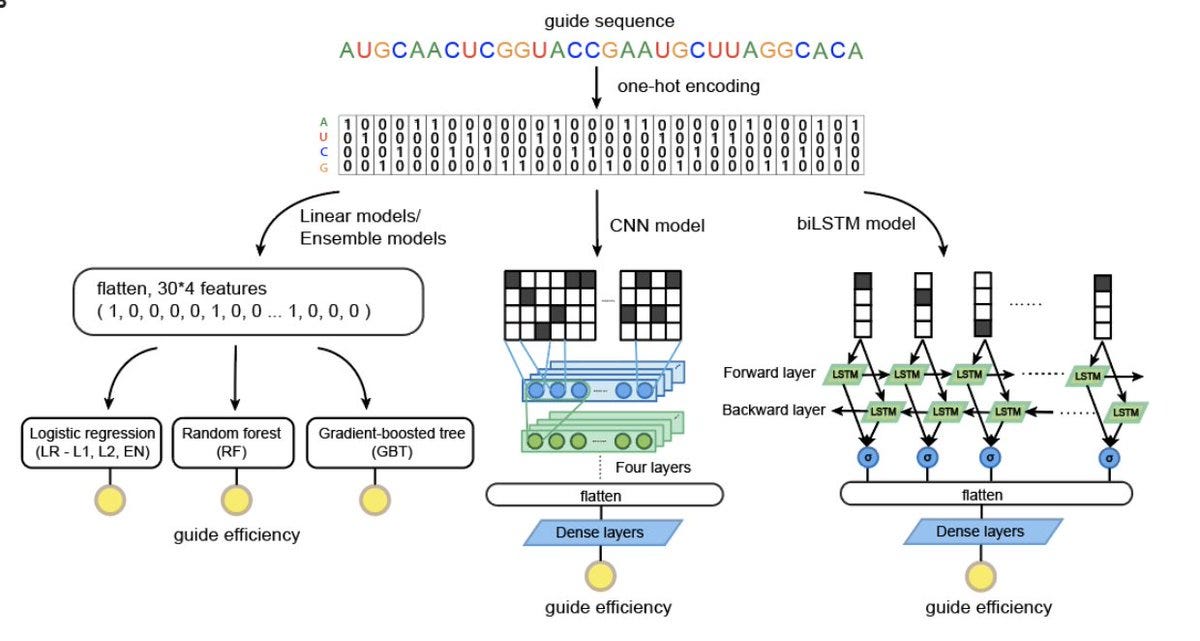
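
To make the idea of tiling a transcript at single-nucleotide resolution concrete, here is a minimal sketch in Python. It slides a fixed-length window along a transcript one nucleotide at a time and emits the reverse-complement guide for each target site. The function names, the toy transcript, and the window length are all my own assumptions for illustration, not details taken from the preprint.

```python
# Hypothetical helpers for illustration only; not the authors' library design code.
COMPLEMENT = str.maketrans("ACGU", "UGCA")


def reverse_complement_rna(seq):
    """Return the reverse complement of an RNA sequence."""
    return seq.translate(COMPLEMENT)[::-1]


def tile_guides(transcript, guide_len):
    """Yield (start, target_site, guide) for every window, stepping 1 nt at a time.

    guide_len is left as a required parameter because the spacer length
    used in the real screen is not stated in this post.
    """
    rna = transcript.upper().replace("T", "U")
    for start in range(len(rna) - guide_len + 1):
        target = rna[start:start + guide_len]
        # The guide must base-pair with the target, so it is the reverse complement.
        yield start, target, reverse_complement_rna(target)


# Toy 20-nt "transcript" and an artificially short 8-nt window.
toy_transcript = "AUGGCCAAGUUCGAGCUGAA"
guides = list(tile_guides(toy_transcript, guide_len=8))

for start, target, guide in guides[:3]:
    print(start, target, guide)
```

A transcript of length L tiled with guides of length g yields L - g + 1 guides, which is why tiling only 55 transcripts can already produce a library with more than 100,000 members.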

With the data generated by the screen, the authors used a convolutional neural network (CNN) to predict the effectiveness of guide sequences. They also used a variety of model interpretability techniques to discover the underlying biological principles that the neural network was learning in order to make predictions. [2]
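
For readers who have not worked with CNNs on sequence data, the sketch below shows the basic mechanics in plain Python: a guide is turned into a one-hot matrix, and a single convolutional filter is slid along it so that it acts like a motif detector. This is not the authors' architecture. The filter weights are made up, and a real model would learn many filters from the screen data and stack further layers on top.

```python
ALPHABET = "ACGU"


def one_hot(seq):
    """Encode an RNA sequence as rows of 4 values, one column per base (A, C, G, U)."""
    rna = seq.upper().replace("T", "U")
    return [[1.0 if base == letter else 0.0 for letter in ALPHABET] for base in rna]


def conv1d_scan(encoded, kernel):
    """Slide one filter along the sequence and return its activation at each position."""
    width = len(kernel)
    scores = []
    for start in range(len(encoded) - width + 1):
        total = 0.0
        for offset in range(width):
            for channel in range(len(ALPHABET)):
                total += encoded[start + offset][channel] * kernel[offset][channel]
        scores.append(total)
    return scores


# Toy filter whose weights are simply the one-hot pattern for "GGA" (an assumption
# for illustration; learned filters would hold arbitrary real-valued weights).
motif_filter = one_hot("GGA")
activations = conv1d_scan(one_hot("ACGGAUGGAC"), motif_filter)
best_position = max(range(len(activations)), key=activations.__getitem__)

print(activations)
print(best_position)
```

The filter fires most strongly wherever the sequence matches its pattern, which is the intuition behind using interpretability techniques to read motifs back out of a trained network.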

Results

In order for deep learning to be effective, it is essential for there to actually be a mapping between the desired inputs and outputs. It is a software paradigm, not magic, and can only approximate a function if it is represented in the data. Thankfully, for CasRx, looking at the data showed an interesting relationship between guides and effective edits:

The most effective guides clustered together in positions along the transcript targets in a highly non-random way. This is really crucial, because this pattern means that there is likely an underlying sequence basis for the behavior of CasRx. If that could be learned, it could be used to efficiently design effective guides for large-scale phenotypic screening.

The authors tested a variety of machine learning models for uncovering the relationship between guide sequence and guide efficiency. They ultimately used a CNN. While they saw good results using just the one-hot encoded sequences, they were able to see performance improvements when also adding features about the predicted secondary structure of the guide RNAs. The final model had impressive performance:

Validation against an orthogonal dataset based on cell surface protein knockdown successfully predicted effective guides with 95% accuracy across the top 10 selected guides for each gene.
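
One plausible way to read a "top 10" number like this is as a precision-at-k metric: rank the guides for a gene by predicted score, keep the top k, and ask what fraction turned out to be effective in the experiment. The sketch below shows that calculation on made-up data. I am assuming this interpretation; the exact evaluation procedure is described in the preprint, not here.

```python
def top_k_precision(guides, k=10):
    """Fraction of the k highest-scoring guides that were experimentally effective.

    guides: list of (predicted_score, is_effective) pairs for one gene.
    """
    ranked = sorted(guides, key=lambda pair: pair[0], reverse=True)
    top = ranked[:k]
    if not top:
        raise ValueError("need at least one guide to compute precision")
    return sum(1 for _, effective in top if effective) / len(top)


# Made-up scores and labels for a single hypothetical gene.
toy_guides = [
    (0.91, True),
    (0.85, True),
    (0.40, False),
    (0.77, False),
    (0.10, False),
    (0.66, True),
]

precision_at_3 = top_k_precision(toy_guides, k=3)
print(round(precision_at_3, 3))
```

Averaging this value across genes gives a single summary number for how trustworthy the model's top picks are.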

This paper is also jam-packed with results describing the motifs and sequence features discovered from the model interpretability techniques. While I’m not going to go through them here, they are worth exploring in the preprint.

One other important result from this work is the creation of rnatargeting.org, which provides free web access to pre-computed guide designs across all human and mouse RefSeq genes, and an option to design new guides for custom input sequences. Providing this kind of resource makes it far more tangible for other researchers to use the outputs of these new models for their own experiments.

Final thoughts

This preprint combined a well-designed experimental screen with deep learning to decipher the rules governing which CasRx guide sequences are the most effective. With these models, the researchers established a community resource for large-scale phenotypic screening with CRISPR. This adds to the versatility of CRISPR, extending its potential as a tool for transcriptome engineering in addition to genome engineering.

More generally, this paper is a great example of the interplay between powerful experimental and computational technologies. Oligo libraries enable the generation of empirical data for a large number of guides. This data can serve as the foundation for powerful unbiased models to learn complex biological functions. More and more, a new generation of scientists is blending these skillsets and tools, and pushing the boundary of what is possible.

Thanks for reading this highlight of “Deep learning of Cas13 guide activity from high-throughput gene essentiality screening”. If you’ve enjoyed reading this and would be interested in getting more highlights of open-access papers in your inbox, you should consider subscribing.

That’s all for now, have a great Wednesday! 🧬

[1] One thing worth noting in the schematic for this screen is the importance of the ability to synthesize a large library of guides using oligos. Oligo libraries will continue to come up time after time in the new technologies that I highlight. They have been as important in some ways as the incredible improvement in sequencing technologies. We can now read and write DNA, and both technologies will continue to improve.

[2] If you’re interested in deep learning interpretability for genomics, I’ve written a post about a new technique in this field, where I also provide some background on this type of research and why I think it is very promising.