Gassing the Miracle Machine

A deep dive on recent experiments in applied metascience

Welcome to The Century of Biology! You can subscribe for free to learn about data, companies, and ideas from the frontier of biology.

Enjoy! 🧬

When I first started writing this newsletter, I focused entirely on writing short highlights of bioRxiv preprints. I assumed that other academics would enjoy quick reads covering new work. As I’ve written about before, this project has started to take on a life of its own, and is growing in a way that I had never expected.

Before diving into today’s piece, I want to quickly share some thoughts on where I want to take this newsletter next.

First, I know that I want to continue to communicate cutting-edge science in an accessible way. I hope that my essays about new scientific results are of use to scientists—maybe even from adjacent fields learning about new areas—but also to engineers, investors, and generally curious people excited about the incredible progress in the life sciences.

From this foundational reading and writing, I often get kernels of new ideas about broader trends in biotechnology. I’ve enjoyed sharing some of these ideas through more in-depth posts like the Sequencing, Synthesis, Scale, Software series.

Through my collaboration with Packy, I’ve continued to grow as a writer. I’ve enjoyed writing deeper dives on some of the topics I’m the most excited about. Writing about Ginkgo was a great learning experience, and it certainly won’t be the last deep dive on a company that I write. Doing some Techbio taxonomy was a valuable exercise.

These different types of posts no longer quite fit the cadence of a weekly newsletter. I’ve found the weekly cadence to be a great fit for writing about primary science results. For more in-depth analyses that require substantial research, posting each week is restrictive. For this reason, my cadence will fluctuate. I probably won’t ever post more than one new essay a week, or fewer than one essay a month.

Today’s post is an example of something that wouldn’t have been possible in the timeframe of a week. I’ve teamed up with Jocelynn Pearl to explore a foundational topic in metascience: scientific funding. How do we finance and structure the process of scientific discovery and translation? What are the ways that we can improve and accelerate this process to build an abundant future?

Let’s jump in! 🧬

Gassing the Miracle Machine

By Elliot Hershberg & Jocelynn Pearl

Intro

Science is a foundational instrument for driving human progress.

It is the system we’ve constructed for arriving at detailed explanations of objective reality that are hard to vary. These types of explanations require coherent models that account for empirical observations. The only way to arrive at these types of explanations is to do the challenging experimental and theoretical work necessary to ensure all of the details of the explanation play a functional role and are closely coupled to objective reality. Explanations of this nature are a central part of how we moved from mythology to physics, and from caves to skyscrapers. The physicist David Deutsch argues that this is the core idea of the Scientific Revolution, “ever since which our knowledge of the physical world and of how to adapt it to our wishes has been growing relentlessly.”

The guiding light of scientific discovery is one of our most precious resources, and must be stewarded with care. Beyond developing new explanations, we have established an intricate human system to convert new knowledge into the inventions that power the modern world. Studying and improving this system is essential—which requires interfacing with countless complex human systems. As the visionary Vannevar Bush argued, “We need better understanding of the whole complex affair, on the part of legislators, the courts, and the public. There will be no lack of inventions; genuine inventors just can’t help inventing. But we want more successful ones, and to get them requires better understanding.”

Guided by his mission to accelerate scientific progress, Vannevar Bush led efforts to expand the U.S. research funding system into what it is today. This powerful system is what Eric Lander, scientist and former director of the Office of Science and Technology Policy, calls the Miracle Machine.

Our systematic efforts in funding basic science have ultimately led to miracles like the Internet, artificial intelligence, cancer immunotherapies, and gene editing technologies like CRISPR. While the results to date have been miraculous, the machine doesn’t run itself: maintaining the system is absolutely critical.

Over time, though, we’ve become complacent in our maintenance of this machine.

Lander coined the term “Miracle Machine” when passionately arguing for an increase to our federal research budget, which has actually been decreasing in recent years. Over time, “adjusted for inflation, the budget for the National Institutes of Health, the federal medical research agency, has fallen since 2003 by nearly 25 percent.” But the challenge of funding science isn’t purely about advocating for a larger budget. Our actual funding mechanisms have become increasingly sclerotic, inefficient, and driven by consensus. A US Government study estimated that professors now “spend about 40 percent of their research time navigating the bureaucratic labyrinth” that is necessary to fund their labs. In another alarming survey, 78% of researchers said they would change their research program ‘a lot’ if given unconstrained funding. Young scientists also face serious bottlenecks to getting funded early in their careers, despite this being potentially the most productive and groundbreaking period in their lives.

Beyond lab funding, there are serious structural bottlenecks in the way that we translate scientific discoveries into new medicines and products. This is what former NIH Director Elias Zerhouni called the Valley of Death. In biotech, company creation has lagged in recent years. The physician-scientist Eric Topol recently pointed out that although we’ve made profound advances in understanding the human genome, this knowledge hasn’t yet been made actionable in the clinic.

Any optimist and advocate of human progress should view the health and efficiency of our Miracle Machine to be of central importance, and we are clearly operating far from our maximum capacity.

So, what do we do?

Challenges and inefficiencies represent new opportunities. In recent years, there has been an explosion of innovation in scientific funding mechanisms. Metascience—the study of science itself—has become an applied discipline. Will the near-future Miracle Machine be a modernization of our current systems or something entirely new? Where and how will the next several leaps in scientific progress occur? These are central questions for nearly all types of innovation. To quote R. Buckminster Fuller, “You never change things by fighting the existing reality. To change something, build a new model that makes the existing model obsolete.”

When analyzing complex human systems with many layers of incentives, it is often surprisingly good advice to follow the money.

The goal of our exploration here is to better understand how we currently Gas the Miracle Machine. How do we actually fund scientific innovation and commercialization? From there, we are going to look at the ideas, technologies, and projects that seek to transform this process.

Let’s dive into some of the innovations in scientific funding in recent years, from private capital to crypto to building entirely new focused research institutes taking aim at the unknowns in our scientific understanding.

We’re going to explore:

A 30,000 foot view of current scientific funding

The Killer Web3 Use Case

Faster Gas, Fast Grants

Going full Bucky (building from the ground up)

A 30,000 foot view of current scientific funding

How is the current Miracle Machine actually structured?

In almost every scientific discipline, research organizations fall into roughly three categories:

Academic institutions (universities, non-profit research institutes, etc.)

Startups

Corporations (mature companies with R&D labs)

Let’s make this more concrete by looking at how biomedicine works. With an annual budget of around $45 billion, the National Institutes of Health (NIH) is the 800-pound gorilla of biomedical research funding. Other agencies like the National Science Foundation, which has an annual budget of roughly $8 billion, are also key funding bodies. These large governmental agencies dole out money to Principal Investigators (PIs) who apply for it through a variety of different grant mechanisms. The PIs are typically professors at research universities or medical schools who run labs. The actual research is carried out by grad students, temporary postdoctoral scholars (postdocs), and some professional staff, while the PI serves as the manager.

This hierarchical funding and organizational structure isn’t the only way that we’ve done laboratory science. The brilliant chemist and microbiologist Louis Pasteur (after whom pasteurization is named) painstakingly carried out many of his own experiments with the help of laboratory assistants. This was actually a crucial part of his process: he trained himself to have a “prepared mind” to notice even subtle results in his experiments. Now, it’s become a universal joke to “watch out when the PI is in the lab” because of their rusty experimental skills.

It’s hard to pin down when the transition to the modern lab system took place, but a key inflection point was the Second World War. Given the importance of the Manhattan Project in the war effort, the funding of science experienced an important transition: it was no longer just about supporting intellectual pursuits; science funding had direct consequences for national security and economic growth. These ideas are best encapsulated in the report entitled Science - The Endless Frontier by Vannevar Bush in 1945.

In subsequent years, many of our current scientific and biomedical research institutions came into existence. The number of U.S. medical schools has doubled since World War II. The number of faculty positions increased by 400% between 1945 and 1965. Science was no longer a solitary intellectual vocation; it was increasingly a team enterprise funded by government grants. This has generally been referred to as the increasing “bureaucratization of science.”

So, the first major gear in the Miracle Machine is government-funded research labs.

Labs are responsible for building out the foundational explanations of the world that make it possible to transform it. The commercialization of science is done through spinout companies that incorporate around specific intellectual property (IP) that has potential for translation. These spinouts are financed by VCs, who are in turn primarily financed by Limited Partners (LPs). LPs are institutions such as university endowments, pension funds, and family offices.

This is the second gear of the Miracle Machine: startups and university spinouts backed by private capital.

Biotech startups primarily focus on scaling and expanding the initial science they incorporate around, and working through the challenging and lengthy process of getting new drugs approved. The journey doesn’t end at approval. Drugs have to be manufactured, marketed, and sold around the world. This leg of the journey is done by pharmaceutical companies, many of which are massive global corporations that have been around for over a century, in some cases even predating the Food and Drug Administration (FDA), which oversees drug approval. Instead of making their own drugs, pharma companies primarily buy assets from biotech companies, which often involves acquiring the entire company.

Massive R&D corporations like Big Pharma are the third major gear of our current Miracle Machine.

This machine genuinely has produced miracles.

The story of Genentech is just one example. Pioneering academic work done at Stanford was spun out into a venture-backed company. This company managed to use genetic engineering to convert bacterial cells into microscopic insulin-producing factories, dramatically reducing the shortage of an important medicine. In 2009, Genentech merged with the Swiss pharma giant Roche in a $47 billion deal that offered the promise of global scale.

The story hasn’t stopped there. Breakthroughs like cell-based therapies and CRISPR gene editing are still making their way from academic labs into the clinic. New theories and models are still being developed in academic labs, and companies are still being formed and financed based on the most promising advances. Pharma is still acting as a major global purchaser and distributor. The system has reached a sort of stable equilibrium between its various actors.

While the Miracle Machine has changed our world for the better, systemic challenges have emerged over time. Our goal in laying out this bird’s eye view of the current system is to make it easier to understand some of its problems and to have the context to understand new projects seeking to tackle them.

Large funding bodies like the NIH have become increasingly bureaucratic over time, with an inherent bias towards funding more conservative and incremental work. We’re pretty sure nobody really thinks that scientists should spend up to 40% of their time slogging through dense government paperwork. As the funding process becomes increasingly complex and driven by committees, it becomes harder for new and promising research directions to gain traction.

The NIH has also developed an affinity for “Big Science” projects where large study sections are assembled to fund projects outside the scope of what individual labs can accomplish. While in principle this seems important, these types of projects have produced mixed results, and require consuming resources that would otherwise be used to fund labs focused on basic discovery science. As the Berkeley biologist Michael Eisen has argued, “big biology is not a boon for individual discovery-driven science. Ironically, and tragically, it is emerging as the greatest threat to its continued existence.”

Large-scale structural changes to government research funding have shaped and constrained the types of scientific problems that researchers can pursue. The baton pass between universities and startups has also become more complex. At the translation stage, terms for university spinouts have been shown to vary widely, in some cases crippling companies before they even get off the ground. Universities are strongly incentivized to closely guard their IP, which can lead to worse terms for the scientists producing the work, and can even result in terms unfavorable enough to cause investors to lose interest in financing translation efforts.

Governmental agencies aren’t the only part of the system with funding blind spots. Venture capitalists are inherently limited in what they can finance: companies must have the potential to become massive $1B+ exits for the math to make enough sense for them to invest. Not all technologies or public goods generate these types of returns, especially on the time scales investors are constrained to operate within. A very narrow segment of society has the opportunity to generate real wealth from backing these private investments as accredited investors, further accelerating inequality.

Pharmaceutical companies are similarly constrained by their financial structure and incentives. The clear incentive is to develop or acquire drugs with the largest possible market, while minimizing R&D costs. This skews the entire pipeline in suboptimal ways, which has real consequences: “there are few, if any, products in the pipeline to address anti-microbial resistance, tuberculosis, and opioid dependency despite the significant unmet need and disease burden. In contrast, many new products are new versions of existing products that offer modest changes to the incumbent drug.”

So where should we expect to look for new ideas and approaches?

It is unlikely that radical solutions will come from the leaders of our current institutions, who are incentivized to perpetuate the system they operate within. One interesting place to look for new ideas is to explore what creative scientists are pursuing for their side projects. As Paul Graham has said about great startup ideas, “the very best ideas almost have to start [as] a side project because they're always such outliers, that your conscious mind would really reject them as ideas for companies.”

When taking this approach, it’s difficult to ignore the steady expansion of activity in the Decentralized Science community.

The Killer Web3 Use Case

Personally, my (Elliot here) initial attitude towards web3 was highly skeptical. As a scientist and engineer, one of my core focus areas has been to use the awesome power of web2 technologies—efficient central databases, fast servers, powerful modern browsers—to build cutting-edge research tools for scientists. Measured and technical assessments such as Moxie Marlinspike’s first impressions of web3 have served as my foundation for thinking about the space.

But over time, I’ve become a cautious optimist—ironically right around the start of the crypto market crash and the increase in web3 skepticism. Why? As I’ve talked with smart people like Packy, Jocelynn, and some of the leading founders in this area, I’ve become excited about what this new set of protocols, tools, and ideas might excel at. We’re observing important social experiments that are attempting to establish new modes of collaboration and organization. From my direct experience in academic science, I know that our research institutions can stand to benefit from changes to the status quo.

Not Boring readers are likely familiar with the giant Web3 Use Cases debate that our fearless leader Packy has found himself in recently. One tangible strength of Web3 is that it offers a new set of tools for creating financial instruments. As Michael Nielsen has pointed out, “new financial instruments can, in turn, be used to create new markets and to enable new forms of collective human behaviour.”

What if one of the killer applications of this new tool stack is to radically improve the scientific funding process?

As we’ve highlighted so far, scientific funding once fell into approximately two buckets: public or private financing. Once crypto investors started to generate serious wealth, a third bucket emerged, and many of these new investors want to put their money to good use.

This alone is worth briefly reflecting on. The expansion of crypto has created a new type of billionaire, with heavy selection towards those who were willing to be early adopters of an entirely new financial system. As Tyler Cowen has argued, this could transform philanthropy, because this new technical elite will have a more substantial appetite for “weird, stand-alone projects.” We are already seeing this dynamic play out, with major investments in projects in longevity science from both Vitalik Buterin and Brian Armstrong.

The difference isn’t purely isolated to the birth of a new class of younger and more technical investors and philanthropists. Web3 technologies are being used to amplify the focus on funding new and weird scientific projects. Today, new funding mechanisms including token sales and crowdfunding backed by crypto are ushering in an entirely new way to finance projects.

Crowdfunding has traditionally been challenging for scientific research, but crypto crowdfunding might be changing that. A new set of open protocols and tools has emerged that is geared towards scaling the funding of public goods. One example is Gitcoin, an organization whose mission is to build and fund public goods. Each quarter they conduct a round of crowdfunding backed by big donors like Vitalik Buterin. The fun innovation here is that grants are quadratically matched, meaning that the number of donors has a bigger impact on matching than the amount donated. In the latest GR15 grant round, Decentralized Science (DeSci) was included as one of four impact categories, again highlighting the growing interest in scientific research in the Web3 space.
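To make the quadratic matching idea concrete, here is a minimal Python sketch of the textbook quadratic funding formula: a project's raw score is the square of the sum of the square roots of its individual donations, minus what donors already gave, and the matching pool is split in proportion to those scores. The function name `quadratic_match`, the project names, and all dollar amounts are hypothetical and chosen for illustration. This is not Gitcoin's actual implementation, which we'd expect to layer additional rules on top of the basic formula.

```python
from math import sqrt

def quadratic_match(contributions, matching_pool):
    """Split a matching pool across projects using the basic quadratic funding rule.

    contributions: dict mapping project name -> list of individual donation amounts
    matching_pool: total amount supplied by the large matching donors
    """
    raw_scores = {}
    for project, donations in contributions.items():
        # (sum of square roots)^2 rewards many small donors over one large donor
        root_sum = sum(sqrt(d) for d in donations)
        # Subtract the direct donations so the score is only the "matched" part
        raw_scores[project] = max(root_sum ** 2 - sum(donations), 0.0)

    total_score = sum(raw_scores.values())
    if total_score == 0:
        return {project: 0.0 for project in contributions}

    # Scale scores so that the matches add up to the available pool
    return {p: matching_pool * s / total_score for p, s in raw_scores.items()}

# Hypothetical round: every project raised $100 directly, from different crowds
example_round = {
    "many_small_donors": [1.0] * 100,  # 100 donors giving $1 each
    "a_few_donors": [25.0] * 4,        # 4 donors giving $25 each
    "one_large_donor": [100.0],        # 1 donor giving $100
}
matches = quadratic_match(example_round, matching_pool=1000.0)
for project, amount in matches.items():
    print(f"{project}: ${amount:,.2f} matched")
```

Even though all three hypothetical projects raised the same amount directly, the project with the broadest base of donors receives nearly all of the matching pool, and the single-donor project receives none.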

The DeSci round received donations from 2,309 unique contributors, backing 83 projects and raising a total of $567,983. An interesting pool of big donors provided the funds for matching donations; among them, Vitalik Buterin (co-founder of Ethereum), Stefan George (co-founder and CTO of Gnosis), Protocol Labs, and… Springer Nature.

Science communities are borrowing another blockchain technology innovation: Decentralized Autonomous Organizations (DAOs).

As Packy has previously described, DAOs are an innovation in web3 governance. A DAO runs “on a blockchain and gives decision-making power to stakeholders instead of executives or board members.” They are “autonomous” in that they rely on a software protocol, recorded on the publicly accessible blockchain, and “trigger an action if certain conditions are met, without the need for human intervention.”
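As a rough illustration of what "trigger an action if certain conditions are met" can look like, here is a toy Python sketch of token-weighted voting on a grant proposal. Everything in it is an assumption made for illustration: the `ToyDAO` and `Proposal` classes, the rule that a proposal passes once more than half of all tokens vote in favor, and the member names and amounts. Real DAOs encode rules like this in smart contracts on a blockchain rather than in a Python script, and their voting rules vary widely.

```python
from dataclasses import dataclass, field

@dataclass
class Proposal:
    description: str
    amount: float                      # funds requested from the treasury
    votes_for: float = 0.0
    votes_against: float = 0.0
    voters: set = field(default_factory=set)
    executed: bool = False

class ToyDAO:
    def __init__(self, treasury, token_balances, approval_threshold=0.5):
        self.treasury = treasury
        self.token_balances = token_balances          # member -> tokens held
        self.approval_threshold = approval_threshold  # hypothetical passing rule

    def vote(self, proposal, member, support):
        if member in proposal.voters:
            raise ValueError(f"{member} has already voted")
        proposal.voters.add(member)
        weight = self.token_balances[member]  # voting power follows token holdings
        if support:
            proposal.votes_for += weight
        else:
            proposal.votes_against += weight
        # No executive sign-off: the rule is checked automatically after each vote
        self._execute_if_passed(proposal)

    def _execute_if_passed(self, proposal):
        total_tokens = sum(self.token_balances.values())
        passed = proposal.votes_for > self.approval_threshold * total_tokens
        if passed and not proposal.executed and self.treasury >= proposal.amount:
            self.treasury -= proposal.amount
            proposal.executed = True

# Hypothetical members and amounts
dao = ToyDAO(treasury=500_000.0,
             token_balances={"alice": 40.0, "bob": 35.0, "carol": 25.0})
grant = Proposal(description="Fund a longevity research project", amount=300_000.0)

dao.vote(grant, "alice", support=True)
executed_after_first_vote = grant.executed   # 40 of 100 tokens is not a majority
dao.vote(grant, "bob", support=True)         # 75 of 100 tokens: funds are released
```

The point of the sketch is the last step: once the condition is satisfied, the funds move without anyone needing to approve the transfer.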

Just like the case of Gitcoin and quadratic funding, one of the most exciting early use cases for DAOs has been to accelerate scientific community building and funding. There’s been a kind of Cambrian explosion of science DAOs over the past year. Here’s a snapshot of some of the DAOs and projects in the space:

If we think of traditional science as a ‘top down approach’ that happens within established and highly centralized university hubs, science DAOs demonstrate an upswing in ‘bottom up’ scientific development. Many of the communities shown in this landscape formed when groups of people adopted a shared goal, such as advancing research around agriculture or hair loss. And these aren’t just Reddit-esque forums for discussion; most DAOs contain specialized working groups, often mixing experts with amateur scientists to work on tasks such as writing new literature reviews for their area of interest or assessing projects for funding.

One of the initial promises of DeSci is the democratization of funding access; essentially, that research that would otherwise not get funded is now being funded. But is that true in the projects that have received funding from communities like VitaDAO’s deal flow group? Among the projects listed as funded on their website, grants of around $200-300K have been allocated to several investigators at universities.

Are the investigators receiving funding from VitaDAO that different from those receiving traditional NIH funding? In the case of Dr. Evandro Fang, whose project studying novel mitophagy activators recently received a $300K investment from VitaDAO, his work has been funded by several NIH and other government grants per his CV. Another argument for the novelty of the VitaDAO approach is speed: its community reviews and funds grants faster than the NIH does, for instance, even if the recipients have a high degree of overlap.

So far, crowdfunding projects like Gitcoin and organizations like VitaDAO in the DeSci community have set their sights on accelerating and simplifying the process of funding basic research. Other projects have begun to take aim at the shortcomings of the biopharma industry that we’ve highlighted, such as rare disease drug development.

Another early selling point for the DeSci space is that it could advance therapies for underserved populations of patients, such as those suffering from ultra-rare disorders. Traditional biotechs don’t typically pursue drug development for smaller populations of patients because they don’t stand to make enough money from an eventual product to justify the steep costs of clinical R&D. But decentralized and global teams are advancing identification of repurposed drugs for rare disease patients. Examples include Perlara and Phage Directory, neither of which relies on blockchain technology, but both of which support the thesis that knowledge from a decentralized network can advance cures.

As for organizations on the blockchain, Vibe Bio is a new company that’s embracing web3 as a way to find “every cure for every community.” Vibe founders Alok Tayi and Joshua Forman plan to build a web3 protocol for setting up patient community DAOs that can co-own and manage their drug development. It’s an exciting innovation in a space where patient communities have been self-organizing for decades, but where corporations typically owned the data and assets. This poses a risk to the patient foundations often seeding the science. These same companies can choose to shelve these projects, as was the case with a recent Leigh Syndrome program at Taysha Gene Therapies.

Vibe recently raised $12M from traditional VCs, including Not Boring Capital, a positive signal that connecting patient communities through DAOs could make for a lucrative process for drug development of rare disease cures. Founder Alok Tayi was inspired to start Vibe after his daughter was born with a disease that lacked a cure. In an interview for the Not Boring podcast, Tayi offered the following when asked “why web3?”

Our ambition was…to create the infrastructure approach by which we could tackle potentially all of diseases that are neglected and overlooked. And so what it required us to do is first think about the technological and governance solution that allowed us to have infinite scalability of participation, but then also to a completely new source of capital that was interested in taking big swings and accomplishing big things.

Biotech venture constraints…pushes them to slightly more conservative investments as opposed to a broader aperture of disease. The other facet I'll also highlight here is that when you look at the other approaches by which someone could do this whether it be a charity, an academic institution or even a C Corp or LLC…there ends up becoming inherent limitations in terms of the quantity of capital, the types of expertise, as well as the quantity of owners and participants you can actually have in the process. And so again, our ambition at Vibe, our mission is to find every cure for every community and not just the 250 who are accredited investors, or qualified purchasers that are allowed to participate in these sorts of traditional type institutions.

Beyond crypto grants and DAOs, there are many additional, novel ideas being explored for ways to apply tokenomics to science and improve some of its pitfalls. Among these strategies are IP-NFTs: essentially, intellectual property tied to a non-fungible token. The first proof of concept of this for a biopharma asset was initiated by a company called Molecule. Their hope is to create an ‘open bazaar for drug development’.

The merging of web3 with science is incredibly nascent; time will tell how these new experiments in funding, ownership and organization of science will play out. We’re optimistic that even if blockchain isn’t the answer to the crises in the scientific ecosystem, at least it has refreshed the conversation around what needs to be fixed and started to distribute this new form of liquidity to one of the best use cases.

Faster Gas, Fast Grants

The experiments in Decentralized Science have shown that the Web3 community has a substantial appetite for funding scientific research and commercial translation. This shouldn’t be taken lightly. While the NIH has an annual budget of roughly $45B, it continually requires profound political hoop jumping to try to convince American taxpayers to increase the size and scope of science spending. With this major difference in enthusiasm, it’s entirely possible to imagine a world in which the $1T crypto market outspends the U.S. government on scientific funding.

Outside of crypto, tech philanthropists have also taken aim at some of the major inefficiencies in our modern scientific funding system. One prominent example is how emergency funding was deployed during the pandemic. Even when faced with a global emergency, the NIH displayed an inability to deviate from its rigid funding structure.

To deploy funding more rapidly, the Fast Grants project was born. Initiated by Emergent Ventures and backed by a list of prominent tech leaders including Elon Musk, Paul Graham, and the Collison brothers, the project aimed to dramatically reduce the time it took for important COVID-19 related research projects to get off the ground. Their argument was simple: “Science funding mechanisms are too slow in normal times and may be much too slow during the COVID-19 pandemic. Fast Grants are an effort to correct this.”

There’s an important lesson here that requires reflecting back on our mental model of how the NIH even came into being in the first place. As we’ve seen so far, our current funding systems were primarily architected by the visionary Vannevar Bush, a key member of the National Defense Research Committee (NDRC) that delivered rapid results during World War II. Part of the Fast Grants mission was to enable a return to a system capable of the type of efficiency that Bush himself advocated for. In his memoir, Bush recalled that “within a week NDRC could review the project. The next day the director could authorize, the business office could send out a letter of intent, and the actual work could start.”

The program was initiated to speed up research to understand COVID-19 during the global pandemic, but the model seems to have traction beyond this use case. In an article for Future, Tyler Cowen, Patrick Hsu, and Patrick Collison reflected on some of the results from the project:

We expected to receive at most a few hundred applications. Within a week, however, we had 4,000 serious applications, with virtually no spam. Within a few days, we started to distribute millions of dollars of grants, and, over the course of 2020, we raised over $50 million and made over 260 grants. All of this was done at a cost of less than 3% Mercatus overhead, thanks in part to infrastructure assembled for Emergent Ventures, which was also designed to make speedy and efficient (non-biomedical) grants.

Incredibly, accepted grants received funding within 48 hours. A second round of funding would follow within two weeks. Recipients were required to publish open access and to share one-paragraph monthly updates.

Among the intriguing findings, many of the applicants came from top universities, a cohort the organizers assumed were already well-supported by traditional NIH-style grants. And 64% of the grantees surveyed said that the research would not have happened without a Fast Grant. Again from Collison, Cowen and Hsu:

Fast Grants pursued low-hanging fruit and picked the most obvious bets. What was unusual about it was not any cleverness in coming up with smart things to fund, but just finding a mechanism for actually doing so. To us, this suggests that there are probably too few smart administrators in mainstream institutions trusted with flexible budgets that can be rapidly allocated without triggering significant red tape or committee-driven consensus.

Fast grants are a method being pursued by multiple organizations. Among them is Impetus Grants for longevity research, founded and led by 22-year-old Thiel Fellow Lada Nuzhna. The initial round funded 98 grants with the goal of accelerating research into biomarkers for aging, understanding mechanisms of aging, and improving the translation of research into the clinic. While one of the stated goals of the program is to fund research that would otherwise be missed by traditional sources, the list of grantees includes several established longevity researchers, and the acceptance rate was actually more stringent than that of NIH (15% for Impetus Grants vs ~20% from NIH). It’s worth noting one valuable aspect of this type of experimentation: it may drive the NIH to adopt and scale some of the most promising new strategies. The Rapid Acceleration of Diagnostics (RADx) was launched by the NIH around the same time that the Fast Grants got off the ground.

It will be interesting to compare, in the coming years, how fast grants might change the makeup of those who can carry out research and the types of outcomes delivered by these investigators. These different projects highlight two interesting trends.

First, in addition to crypto markets, a new generation of tech philanthropists has displayed a genuine interest in funding science in new ways.

Second, sometimes less is more.

As we explore new forms of funding, it’s worth recognizing that grant writing should be secondary to actually carrying out the science. Sometimes, the best solution is to rapidly evaluate and fund the most promising proposals and get out of the way of progress.

Going full Bucky (building from the ground up)

So far, we’ve painted a picture of the way that our current institutions operate in broad strokes, and have looked at the way that crypto markets, Web3 technologies, and tech philanthropists have already made contributions to the scientific funding landscape. We now live in a world where Vitalik Buterin is anchoring quadratic crowdfunding for science projects, and the Collison brothers are backing low overhead grant mechanisms to mitigate governmental inefficiencies. These new ideas are being explored to accelerate and expand the Miracle Machine in exciting and important ways.

With all of these new efforts, an interesting question emerges: what if some of the problems in scientific funding can’t be solved purely with new sources of capital or funding mechanisms?

Ultimately, our current scientific institutions represent a very small sampling of the full space of possible organizational structures. The Miracle Machine that we have is the byproduct of a very specific set of historical pressures and ideas. Some of the new funding ideas being explored today require the construction of an entirely new set of 21st century scientific institutions. In other words, they are taking Buckminster Fuller’s philosophy to heart and exploring new ways to fund and organize science from the ground up.

How are these new in-real-life (IRL) institutes being structured to address the missing links in science?

One approach is focused research organizations (FROs), a new type of institute dedicated to solving a focused scientific challenge such as blue-sky neurotech or longevity. Other focus areas proposed for FROs include identifying antibodies for every protein, AI for math, and developing super-resilient organ transplants. The central idea of the FRO model is that these types of scientific projects fall into a sort of institutional void. They are too capital intensive and team-oriented for academia, yet fall outside the scope of startups or corporations because they are more of a public good than a clear product with commercial value. FROs aim to fill that gap:

Convergent Research was co-founded by Adam Marblestone and Anastasia Gamick to spin up new FROs. This past spring, CR put on a metascience workshop, gathering together heads of institutes, policy makers from DC and the UK, and thought leaders in the form of writers and general changemakers in the metascience arena. The primary goal of the workshop was to brainstorm how new organizations could improve scientific progress.

A common theme among the presentations of the attendees was that something had gone awry with the scientific ecosystem. To summarize the working hypothesis: the predominant model of university-based research published in traditional scientific journals is creating a fragile ecosystem that needs disruption.

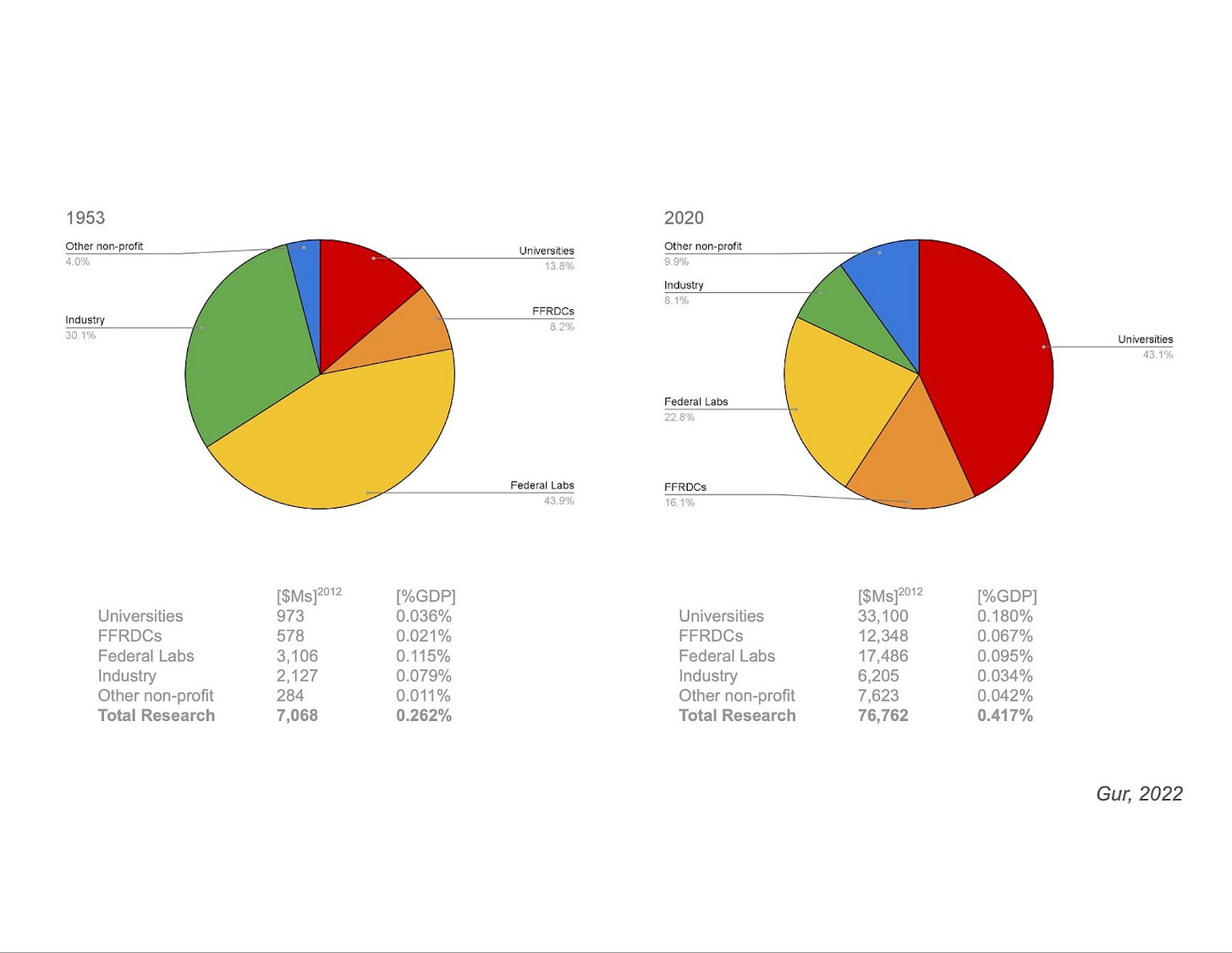

In one presentation by Ilan Gur, CEO of Aria Research, we were shown a pie chart for the distribution of research funding over time.

This graph shows something really interesting. The massive restructuring of scientific funding after the Second World War that we alluded to earlier coincided with a major shift in the composition of our scientific institutions. Basic research funding in the United States has gone from primarily funding federal labs (1953, left pie chart), to primarily funding university-based research (2020, right pie chart). Could this shift to university-centric funding be to blame for some of the flaws in our current ecosystem?

In another presentation, we watched video clips of scientists speaking to the magic of the setting at the Santa Fe Institute.

“The way that we did it at the Santa Fe Institute was to escape from society; build a community in the mountains, in the shadow of the atom bomb.” –David Krakauer, President, Santa Fe Institute.

The intimacy and aesthetic of this environment were intoxicating. The Santa Fe Institute represents a real departure from the conventional institutional structure of the research university, and as a result it has its own unique culture. It serves as a place for rebel scientists to pursue their boldest and most unique ideas. When watching the video, we wondered: how can we build more places like this? What would it take to design the space capable of fostering the next Feynmans or Einsteins of the world? How big were the teams? What was the leadership like?

Many of the metascience changemakers or rebel scientists are following the Buckminster Fuller Principle by building new institutions IRL.

Among the institutes leading the charge is Arcadia Science, led by Seemay Chou and Prachee Avasthi. Arcadia is an applied experiment in metascience. The institute is structured as a research and development company, but primarily focuses on basic science and technology development. Part of the core thesis is that we have fundamentally misunderstood how valuable basic science is, especially if institutions are designed to help scientists efficiently translate their work into new products and technologies.

Along the way, Arcadia is experimenting with every part of their research process. For example, they are disrupting the status quo in the scientific publishing ecosystem by barring their scientists from publishing in traditional journals; instead they publish journal-like articles on their website complete with links to project descriptions, data, comments and even Tweets. While this may seem like a subtle point, it is actually a very intentional departure from the bizarre dynamics and extractive nature of the existing academic publishing system. Experimenting with self-publishing may lead to improvements in how code, data, and results are shared with other scientists looking to build on the work.

Another interesting applied experiment in institution building is New Science. The organization is primarily the brainchild of writer and researcher Alexey Guzey, who spent a year putting together a classic blog post entitled How Life Sciences Actually Work exploring the realities of our current biomedical institutions. One of the major observations that struck Alexey was the lack of funding opportunities for young scientists:

Over time, an increasing percentage of scientific funding has gone towards supporting more (literally) senior scientists, making it harder for younger scientists to get initial funding for their labs. This graph doesn’t even tell the entire story: it only reflects the difficulties in obtaining funding for young professors. Young scientists pursuing their PhDs or postdoctoral fellowships have even less autonomy—they primarily work on the projects that their professor was able to gain funding for. While tech has dramatically expanded agency for young people—giving them a path towards founding, financing, and leading their own companies—young academics often aren’t able to truly develop or obtain funding for their own projects.

One of the core goals of New Science is to fill this niche. They have already rolled out a short fellowship program for young scientists to pursue their own ideas and projects. Over time, the plan is to create longer fellowships and ultimately independent institutes that put young scientists back in the driver’s seat of their own work:

Much like Arcadia, they will be pursuing a variety of applied metascience experiments along the way. For example, they are having fellows share essays about their ideas and work on their Substack—which you should seriously consider subscribing to. They are also funding more research and writing on how our current life sciences institutions actually work, like their massive report on the NIH, or Elliot’s essay on software funding in the life sciences.

One criticism of these new scientific institutions so far is that they have largely relied on the support of large donors, such as Eric Schmidt. Nadia Asparouhova has documented some of the ways in which the new tech elite have pursued philanthropy in the life sciences in recent years, and this shows no signs of slowing down. Beyond the Chan Zuckerberg Biohub, we’ve seen the launch of another tech-backed life sciences center in the announcement of the Arc Institute. There’s been some debate within the space of independent institutes as to the best type of financing: does a single donor give an institute ultimate intellectual freedom, whereas multiple donors, each with their own desires and biases, risk pulling the institute in too many directions?

This question highlights a key philosophical difference between Decentralized Science and many of the new institutions that are emerging. The Decentralized Science movement is attempting to build new protocols and tools to give diffuse networks of scientists and technologists the power to more effectively organize and act. If there is a major funding gap, why build a FRO? Why not just build a new DAO and let the scientific community organically figure out how to solve the problem once they have the resources?

After several decades without substantial innovation in scientific funding or institution building, we are now seeing all of these experiments play out in parallel. As we’ve argued, science is one of the most valuable and productive enterprises that our species pursues, so there should be plenty of room at the table for new ideas and resources. Still, there will likely be some competition between approaches. As Nadia points out, “I’m particularly interested in watching the tension between tech- and crypto-native approaches unfold. While they are at different stages of maturity, at a high level, these are two major experiments playing out at the same time.”

Conclusion

Science is one of the most powerful tools we have for making progress as a species. As Packy has argued, it is a fundamentally optimistic process: “to run experiments in order to better understand the universe assumes a belief that we can discover more than we already know, and use it to improve the world.” We have the profound fortune of living in a world that is explainable, and is capable of being transformed in new ways as our knowledge increases.

Due to the central role of scientific research in the Second World War, U.S. leaders like Vannevar Bush architected a massive governmental machine to scale scientific funding at the national level. We are now living in a world powered by the miracles this machine has produced. There are several layers on top of our vast federal funding system that are necessary to ultimately produce products. Technologies need to be spun out of universities and receive additional private financing. These spinouts also need to interface with the set of larger R&D megacorporations that control various aspects of sales and commercialization.

While the Miracle Machine has earned its nickname many times over, we’ve highlighted several reasons why it is now necessary to experiment with new scientific systems. An increase in bureaucracy over time is practically a law of Nature, and the NIH is not an exception. Our most brilliant minds are now spending up to half of their time applying for complex government grants that can be rejected for minor issues with fonts. Over time, government funding has fixated on consensus-driven, conservative projects led by senior investigators.

An appetite for change has clearly become a part of the current Zeitgeist. We are now living through a Cambrian explosion of new funding and institution models for science. Our goal in this post has been to equip you with a mental model for how the current system operates, and to provide a field guide for further exploration of the many exciting applied experiments in metascience.

If you happen to believe that there are use cases for Web3 and we’ve managed to convince you that funding science is one of them, you should head to the DeSci Wiki and consider joining projects that excite you. If you’re a scientist looking for a faster way to get your project funded, we hope that the resources we’ve outlined on fast grants will be of use. If the notion of helping to build a new 21st century scientific institution sounds like it could be your life’s work, many of the projects we’ve mentioned are rapidly expanding and looking for both scientific and non-scientific contributors. Samuel Arbesman’s curated OverEdge Catalog offers a great starting point for a broad overview of new types of institutes.

One of the themes that we’re thinking about right now is the tension between centralization and decentralization. As Packy recently wrote, “the battle between centralization and decentralization is coming to a head in a bunch of areas, proto-Cold Wars on many fronts. Web2 vs. web3. Russia and China vs. the West. OpenAI vs. Open AI.” The story for science is no different. It will be fascinating to watch how these differing philosophies interface with each other over time. As Balaji has argued in The Network State, perhaps communities can digitally form in a decentralized style before building new systems in the physical world like new states, or in the case of Decentralized Science, perhaps new labs or institutes. In the reverse fashion, centralized institutes could adopt Web3 technologies and offer their skills and expertise as part of a more diffuse scientific network with new protocols and approaches to collaboration.

Whether they are experiments in new research institutes in physical labs or explorations in blockchain and new web labs, it's an exciting time to watch innovation unfolding in both organization and financing. We’re hopeful for the future and for the progress these ideas will bring about.

Thanks for reading this analysis of the Miracle Machine.

Until next time! 🧬
